How to use spark.driver.maxResultSize in Spark or PySpark? Spark jobs sometimes fail with an error saying that the serialized results of all partitions are bigger than the Spark driver max result size. Let us look at the reason behind this error and how to resolve it using spark.driver.maxResultSize.

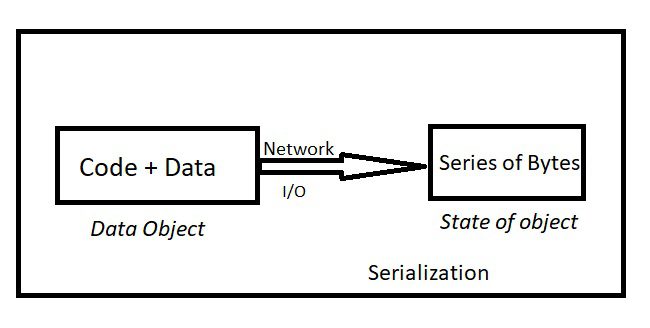

1. What is serialization?

Serialization is the process of converting data objects into a series of bytes so that they can be transferred across the network. In Spark, data moving between executors, or between the driver and an executor, is sent in serialized form.
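To make the idea concrete, here is a minimal sketch that uses Python's standard pickle module (PySpark's default serializers for Python objects are pickle-based; the JVM side uses Java or Kryo serialization instead). The `record` dictionary is a made-up sample, not data from this article.

```python
import pickle

# Hypothetical sample record; any picklable Python object would do.
record = {"id": 1, "name": "spark", "scores": [0.5, 0.75, 1.0]}

# Serialize: turn the in-memory object into a byte string.
payload = pickle.dumps(record)

# Deserialize: rebuild an equal object from those bytes.
restored = pickle.loads(payload)

print(type(payload).__name__, len(payload), "bytes")
print(restored == record)
```

The length of `payload` is the kind of "serialized size" that spark.driver.maxResultSize counts when results are sent back to the driver.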

2. What is spark.driver.maxResultSize?

As per the official Spark documentation, spark.driver.maxResultSize sets the limit, in bytes, on the total size of the serialized results of all partitions for each Spark action such as collect. Because the driver has to hold those results in memory, this property also matters when tuning a Spark application. For more details on this property refer to Spark Configuration.

Note:

- The default value is 1g. The value should be at least 1M, and 0 means unlimited.

- If the total size of the serialized results sent to the driver is greater than this limit, Spark aborts the job with an error reporting that the total size of serialized results is bigger than spark.driver.maxResultSize.

- A very high limit can lead to out-of-memory errors in the driver, so it is better to set a proper limit that protects it.
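The rule in these notes can be sketched in plain Python. This is an illustration only, not Spark's actual code: `parse_size` and `exceeds_limit` are hypothetical helpers, and the parser assumes only the k, m, g, and t suffixes (binary multiples) or a plain byte count.

```python
# Binary multipliers for Spark-style size suffixes (assumed subset: k, m, g, t).
_UNITS = {"k": 1024, "m": 1024**2, "g": 1024**3, "t": 1024**4}


def parse_size(value: str) -> int:
    """Convert a size string such as '1g' or '512m' to a number of bytes."""
    text = value.strip().lower()
    if text and text[-1] in _UNITS:
        return int(text[:-1]) * _UNITS[text[-1]]
    return int(text)  # no suffix: treat the number as bytes


def exceeds_limit(result_sizes, max_result_size: str) -> bool:
    """Return True if the summed serialized sizes are over the limit."""
    limit = parse_size(max_result_size)
    # A limit of 0 means "unlimited", so nothing can exceed it.
    return limit > 0 and sum(result_sizes) > limit


# Four partitions returning about 300 MiB each (1200 MiB in total).
sizes = [300 * 1024**2] * 4
print(exceeds_limit(sizes, "1g"))  # True: 1200 MiB is over 1024 MiB
print(exceeds_limit(sizes, "2g"))  # False: under 2048 MiB
print(exceeds_limit(sizes, "0"))   # False: 0 disables the check
```

Note that the check is on the total across all partitions, so many small partitions can hit the limit just as one large partition can.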

3. View and set driver maxResultSize.

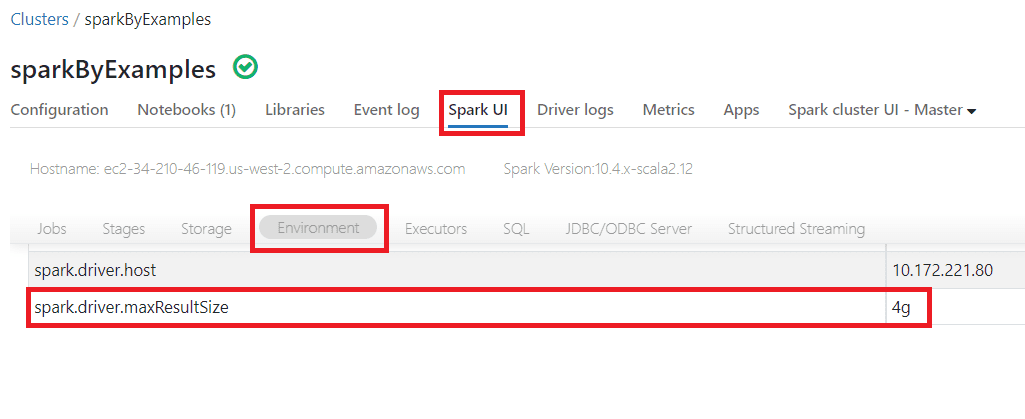

In our demo, we use Databricks to show the driver max result size and how to set it. The limit can be defined while creating the cluster in Databricks or by updating the configuration of the Spark session.

We can find the current spark.driver.maxResultSize in either of two places:

- Spark UI

- SparkSession configuration

3.1. Spark UI

In the cluster menu, the Spark UI holds the details of the jobs, stages, storage, environment, etc. Under the Environment tab, we can find the value of spark.driver.maxResultSize. In our case, it is 4g as shown above.

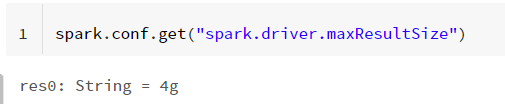

3.2. Using Spark Configuration

We can use the Spark configuration get command, spark.conf.get("spark.driver.maxResultSize"), to find the value that was defined during the Spark session or cluster creation.
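As a minimal PySpark sketch of that lookup, the helper below wraps `spark.conf.get`. The function name `get_max_result_size` is hypothetical, `spark` is assumed to be an existing SparkSession (as in a Databricks notebook), and the fallback of "1g" is the documented default, passed explicitly because the key may not be set on the session.

```python
def get_max_result_size(spark, default="1g"):
    """Return the session's spark.driver.maxResultSize, or `default` if unset.

    `spark` is assumed to be an existing SparkSession.
    """
    return spark.conf.get("spark.driver.maxResultSize", default)


# In a notebook where `spark` already exists:
# print(get_max_result_size(spark))
```

On the cluster described in this article, the call would be expected to return the same value that the Environment tab of the Spark UI shows.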

3.3. Set up spark.driver.maxResultSize

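We can pass the driver max result size into the Spark session configuration using the command below. Here the limit is given in gigabytes (8g). Because this is a core Spark setting that the driver reads at startup, some Spark versions may reject or ignore a change made on an already running session; in that case, set it in the cluster's Spark config or when the session is created.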

spark.conf.set("spark.driver.maxResultSize", "8g")
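The same property can also be supplied when the session is first created. The sketch below is one way to do that in PySpark, under a few assumptions: `driver_conf` and `build_session` are hypothetical helper names, the app name is a placeholder, and pyspark must be installed for `build_session` to run. If a session already exists (for example in a Databricks notebook), `getOrCreate()` returns that session, so the cluster's Spark config is the place to set the value there.

```python
def driver_conf(max_result_size="2g"):
    """Build the configuration entries to apply at session creation."""
    return {"spark.driver.maxResultSize": max_result_size}


def build_session(app_name, conf):
    """Create (or fetch) a SparkSession with the given configuration."""
    # Imported lazily so that this module loads even without pyspark.
    from pyspark.sql import SparkSession

    builder = SparkSession.builder.appName(app_name)
    for key, value in conf.items():
        builder = builder.config(key, value)
    return builder.getOrCreate()


# Example usage (requires pyspark and a Java runtime):
# spark = build_session("max-result-size-demo", driver_conf("8g"))
```

Keeping the settings in a small dictionary makes it easy to review the limit alongside other driver options before the session starts.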

4. Conclusion

Configuring a suitable spark.driver.maxResultSize is a good practice when tuning a Spark application. It protects the driver from memory errors when the serialized result is unexpectedly large, and it helps jobs run more smoothly.
