In this article, I will explain how to set up and run a PySpark application in the Spyder IDE. Spyder is a popular tool for writing and running Python applications, and you can use it to run PySpark applications during the development phase.

Install Java 8 or a later version

PySpark uses the Py4J library, which lets Python programs dynamically access JVM objects while a PySpark application is running. Hence, you need Java installed. Download Java 8 or a later version from Oracle and install it on your system.

After installation, set the JAVA_HOME and PATH variables.

JAVA_HOME = C:\Program Files\Java\jdk1.8.0_201

PATH = %PATH%;C:\Program Files\Java\jdk1.8.0_201\bin
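To confirm that the variables took effect, a small Python check like the one below can help. It is only a sketch: the helper name `check_java` is hypothetical, and all it does is read JAVA_HOME and look for a `java` executable on PATH. Open a new command prompt before running it so that the updated variables are picked up.

```python
import os
import shutil


def check_java(env=None):
    """Report the JAVA_HOME value and the java executable found on PATH."""
    env = os.environ if env is None else env
    return {
        "JAVA_HOME": env.get("JAVA_HOME"),
        # shutil.which returns None when no java executable is found
        "java_executable": shutil.which("java", path=env.get("PATH")),
    }


print(check_java())
```

If either value comes back as `None`, the corresponding variable is probably not visible to the current session.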

Install Apache Spark

Download Apache Spark from the Spark download page by selecting the link at “Download Spark (point 3)”. If you want a different version of Spark and Hadoop, select it from the drop-downs; the link at point 3 then updates to point to the selected version.

After downloading, extract the archive using 7zip and copy the extracted folder spark-3.0.0-bin-hadoop2.7 to c:\apps

Now set the following environment variables.

SPARK_HOME = C:\apps\spark-3.0.0-bin-hadoop2.7

HADOOP_HOME = C:\apps\spark-3.0.0-bin-hadoop2.7

PATH=%PATH%;C:\apps\spark-3.0.0-bin-hadoop2.7\bin
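The three settings above can be sanity-checked from Python. The following is a minimal sketch, assuming only that SPARK_HOME and HADOOP_HOME should be set and that the Spark `bin` folder should appear on PATH; the function name `spark_env_problems` is made up for illustration.

```python
import os


def spark_env_problems(env=None):
    """Return a list of human-readable problems with the Spark variables."""
    env = os.environ if env is None else env
    problems = [f"{name} is not set"
                for name in ("SPARK_HOME", "HADOOP_HOME") if not env.get(name)]

    spark_home = env.get("SPARK_HOME")
    if spark_home:
        def norm(path):
            # Normalise case and separators so equivalent paths compare equal
            return os.path.normcase(os.path.normpath(path))

        bin_dir = norm(os.path.join(spark_home, "bin"))
        path_dirs = {norm(p) for p in env.get("PATH", "").split(os.pathsep) if p}
        if bin_dir not in path_dirs:
            problems.append(f"{bin_dir} is not on PATH")
    return problems


print(spark_env_problems() or "Spark environment variables look fine")
```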

Set up winutils.exe

Download the winutils.exe file from winutils and copy it to the %SPARK_HOME%\bin folder. Winutils builds differ for each Hadoop version, so download the one that matches your Hadoop version from https://github.com/steveloughran/winutils
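As a quick check that the file landed in the right place, something like the following sketch can be used. The helper name `find_winutils` is hypothetical; it only tests whether winutils.exe exists in the `bin` folder under SPARK_HOME and does not verify that the build matches your Hadoop version.

```python
import os


def find_winutils(spark_home):
    """Return the path to winutils.exe in the bin folder, or None if absent."""
    candidate = os.path.join(spark_home, "bin", "winutils.exe")
    return candidate if os.path.isfile(candidate) else None


spark_home = os.environ.get("SPARK_HOME")
if spark_home:
    print(find_winutils(spark_home) or "winutils.exe not found in the bin folder")
else:
    print("SPARK_HOME is not set")
```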

PySpark shell

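Now open a command prompt and type the pyspark command to start the PySpark shell. You should see the Spark banner followed by the Python >>> prompt.

The shell also starts a Spark context web UI, which by default is available at http://localhost:4040. If that port is already in use, Spark tries the next one, such as 4041.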
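If you are unsure which port the web UI ended up on, a small probe such as the one below can narrow it down. This is a sketch under assumptions: the helper names and the port range 4040 to 4044 are illustrative choices, and an open port only shows that something is listening there, not that it is the Spark UI.

```python
import socket


def port_is_open(host, port, timeout=0.5):
    """Return True if a TCP connection to host:port succeeds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def find_spark_ui(host="localhost", ports=range(4040, 4045)):
    """Return the first port in `ports` that accepts connections, else None."""
    for port in ports:
        if port_is_open(host, port):
            return port
    return None


print(find_spark_ui())
```

Run it while the PySpark shell is open in another window; it prints `None` when nothing is listening on the probed ports.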

Run PySpark application from Spyder IDE

To write PySpark applications, you need an IDE. There are dozens of IDEs to choose from, and I chose Spyder IDE. If you have not installed Spyder IDE along with the Anaconda distribution, install them before you proceed.

Now, set the following environment variable.

PYTHONPATH => %SPARK_HOME%/python;%SPARK_HOME%/python/lib/py4j-0.10.9-src.zip;%PYTHONPATH%
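The Py4J archive name includes a version number, so it differs between Spark releases. The sketch below looks inside your Spark folder and prints the entries PYTHONPATH needs; the function name `pyspark_path_entries` is hypothetical, and it assumes the archive follows the `py4j-*-src.zip` naming seen above.

```python
import glob
import os


def pyspark_path_entries(spark_home):
    """List the directory and archive(s) PYTHONPATH needs for this install."""
    python_dir = os.path.join(spark_home, "python")
    # The Py4J archive name carries a version, so match it with a wildcard.
    py4j_zips = sorted(glob.glob(os.path.join(python_dir, "lib", "py4j-*-src.zip")))
    return [python_dir] + py4j_zips


spark_home = os.environ.get("SPARK_HOME")
if spark_home:
    print(os.pathsep.join(pyspark_path_entries(spark_home)))
else:
    print("SPARK_HOME is not set")
```

The printed value can be compared with, or pasted into, the PYTHONPATH setting.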

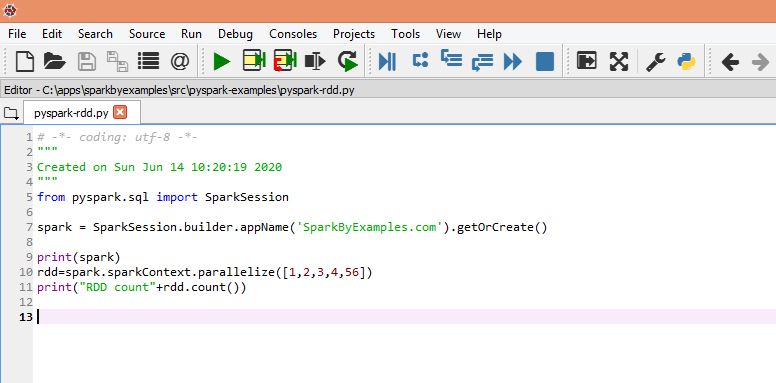

Now open Spyder IDE, create a new file with a simple PySpark program, and run it. You should see 5 in the output.
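A minimal sketch of such a program is shown here. It is not necessarily the original listing: the application name, the `local[1]` master, the sample list, and the helper names are assumptions chosen for illustration. It creates a local SparkSession, distributes a five-element list as an RDD, and prints the element count.

```python
import os


def count_elements(spark, values):
    """Distribute `values` as an RDD and return the number of elements."""
    rdd = spark.sparkContext.parallelize(values)
    return rdd.count()


def main():
    # Imported here so a missing PYTHONPATH entry surfaces when main() runs
    from pyspark.sql import SparkSession

    spark = (SparkSession.builder
             .master("local[1]")          # run locally with a single thread
             .appName("SpyderExample")    # hypothetical application name
             .getOrCreate())
    try:
        print(count_elements(spark, [1, 2, 3, 4, 5]))  # list of five elements
    finally:
        spark.stop()


if __name__ == "__main__":
    # The setup in this article relies on SPARK_HOME, so check it first
    if os.environ.get("SPARK_HOME"):
        main()
    else:
        print("SPARK_HOME is not set; finish the environment setup first.")
```

If the import fails with a "No module named pyspark" error, recheck the PYTHONPATH setting and restart Spyder so that it picks up the new environment variables.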

Happy Learning !!