PySpark reduceByKey() transformation is used to merge the values of each key using an associative reduce function on PySpark RDD. It is a wider transformation as it shuffles data across multiple partitions and It operates on pair RDD (key/value pair).

When reduceByKey() performs, the output will be partitioned by either numPartitions or the default parallelism level. The Default partitioner is hash-partition.

First, let’s create an RDD from the list.

data = [('Project', 1),

('Gutenberg’s', 1),

('Alice’s', 1),

('Adventures', 1),

('in', 1),

('Wonderland', 1),

('Project', 1),

('Gutenberg’s', 1),

('Adventures', 1),

('in', 1),

('Wonderland', 1),

('Project', 1),

('Gutenberg’s', 1)]

rdd=spark.sparkContext.parallelize(data)

As you see the data here, it’s in key/value pair. Key is the work name and value is the count.

reduceByKey() Syntax

reduceByKey(func, numPartitions=None, partitionFunc=)

reduceByKey() Example

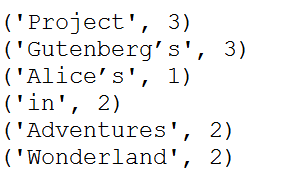

In our example, we use PySpark reduceByKey() to reduces the word string by applying the sum function on value. The result of our RDD contains unique words and their count.

rdd2=rdd.reduceByKey(lambda a,b: a+b)

for element in rdd2.collect():

print(element)

This yields below output.

Complete PySpark reduceByKey() example

Below is complete RDD example of PySpark reduceByKey() transformation.

from pyspark.sql import SparkSession

spark = SparkSession.builder.appName('SparkByExamples.com').getOrCreate()

data = [('Project', 1),

('Gutenberg’s', 1),

('Alice’s', 1),

('Adventures', 1),

('in', 1),

('Wonderland', 1),

('Project', 1),

('Gutenberg’s', 1),

('Adventures', 1),

('in', 1),

('Wonderland', 1),

('Project', 1),

('Gutenberg’s', 1)]

rdd=spark.sparkContext.parallelize(data)

rdd2=rdd.reduceByKey(lambda a,b: a+b)

for element in rdd2.collect():

print(element)

In conclusion, you have learned PySpark reduceByKey() transformation is used to merge the values of each key using an associative reduce function and learned it is a wider transformation that shuffles the data across RDD partitions.

Happy Learning !!